UX Research

Prompt Engineering

UI Design / Visual Design

User Experience Research

AI Exploration

Competitor Analysis

Prompt Engineering

Data Analysis and Output Evaluation

Visual Design

User Experience Documentation

AI Ethics and Future Considerations

ChatGPT - 3.0/3.5

Midjourney

RunwayML

Riffusion

Figma

Adobe Photoshop

2 Prompt Engineers / Designers

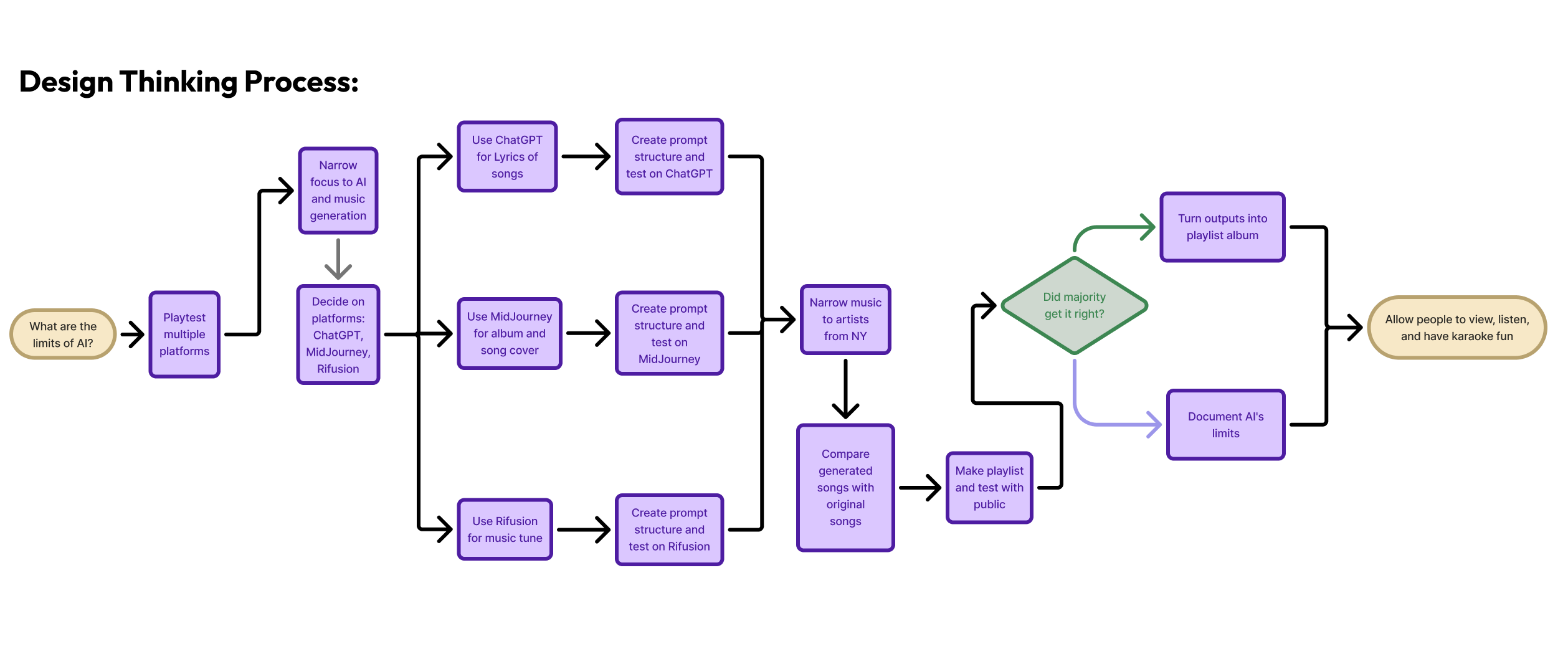

During the peak of public curiosity about AI following the release of ChatGPT, we worked on our project: CYAN (Create Your Album Now). As AI tools, particularly ChatGPT, gained rapid popularity, discussions around their capabilities, biases, and ethical considerations surged. The technology was being widely adopted, but there was a lack of structured exploration into its strengths and limitations, especially from a user-experience and creative perspective.

We recognized an opportunity to analyze AI’s constraints in generating coherent, original, and meaningful responses, particularly in experimental and interactive contexts. The goal was to creatively explore how far generative AI could go in producing music, lyrics, album art, and immersive storytelling through location-based triggers — pushing the boundaries of creativity, coherence, and emotional resonance in AI.

With experimentation, there was one particular niche that we knew we could target: testing the limits of AI through the creation of music. This became the core focus of CYAN — blending songwriting, image generation, and audio tools to co-create albums with the help of AI.

Google Analytics data from the past 5 years shows that the current website had a very low percentage of returning users (7.8%) and a high bounce rate (41.26%), with an average session duration of less than a minute.

The objective was to design and conduct structured experiments that tested AI’s creative boundaries, logical consistency, and adaptability. We aimed to explore how AI could be used in unexpected ways, what limitations emerged in different contexts, and how users perceived its responses. Additionally, we sought to document findings that could inform designers, developers, and users about the realistic expectations and future potential of AI.

As a co-creator of CYAN, my responsibility was to:

- Engineer and iterate prompts across multiple AI platforms (ChatGPT, Midjourney, Riffusion).

- Analyze output patterns, hallucinations, and biases.

- Compare AI-generated content with historical and real-life references.

- Design a playful, educational experience that invites audiences to co-create music albums based on time, place, and artist.

Planning & Brainstorming

Competitor Analysis

From my experiments, I realized that music was an underexplored niche worth focusing on: the algorithms were not as well trained or tested for generating it as they were for text and images.

The goal was to highlight New York City’s vibrance using modern machine learning tools like GPT, while anchoring AI outputs in specific times and places to evaluate context-awareness.

I suggested using actual songs rather than vague, artist-inspired versions, because a specific song makes the AI output much easier to compare against the original.
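To make the idea of anchoring a prompt in a specific song, place, and time more concrete, here is a minimal sketch in Python. It is only an illustration: the `SongContext` class, the `build_prompt` function, the prompt wording, and the placeholder values are all hypothetical and are not the prompts we actually used in CYAN.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SongContext:
    """Hypothetical container for the anchors a prompt is built around."""
    artist: str
    song: str
    place: str
    year: int


def build_prompt(ctx: SongContext) -> str:
    """Return a text prompt pinned to one song, one place, and one year."""
    return (
        f"Write new lyrics in the style of '{ctx.song}' by {ctx.artist}, "
        f"set in {ctx.place} in {ctx.year}. "
        "Mention only details that fit that place and year."
    )


# Placeholder values for illustration only; not taken from the project.
example = SongContext(
    artist="<artist>", song="<song>", place="New York City", year=1977
)
prompt = build_prompt(example)
print(prompt)
```

Keeping the anchors in one structure means the same song, place, and year can be reused across the text, image, and audio tools, so outputs are compared against the same reference.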

🎧 Try It Yourself: HERE — Walk through the NYC-inspired music journey, listen to AI-generated covers, and compare them to the originals.

As students, we’ve always been told to do our best to keep up with technology. Of all the applications we looked at, the one that made us most curious was the use of AI in music and its future potential. CYAN became our creative response to that curiosity.

The project provided valuable insights into AI’s operational limits, influencing discussions on how AI tools should be integrated responsibly in creative and professional environments. The findings were referenced in conversations around AI ethics and user expectations, helping shape more informed interactions with AI models. It also informed future iterations of AI-driven tools by identifying key areas for improvement, such as enhancing contextual memory and reducing cultural or genre-based biases.

We developed a working prototype of an AI-powered, location-aware music experience rooted in New York’s rich musical history — where users could interact with songs generated by AI and compare them to original artists. The street-level engagement added a real-world dimension to the experiment.